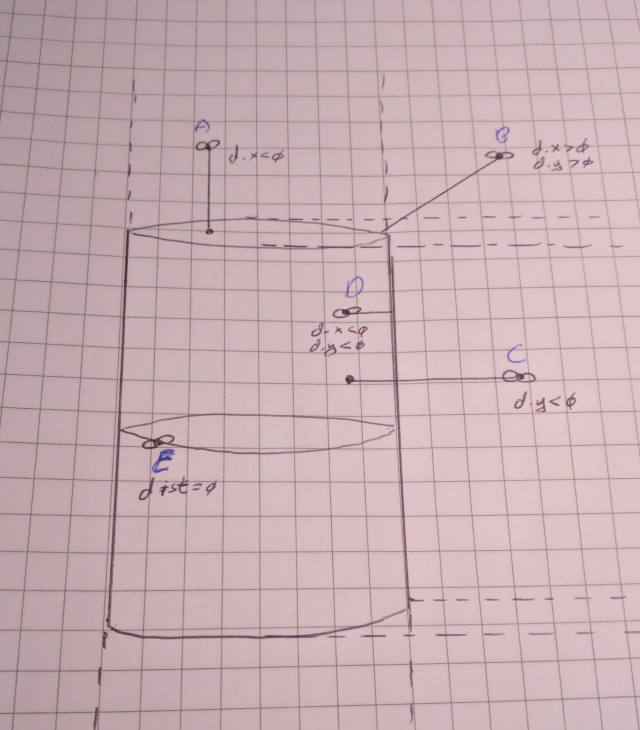

A very useful operation in fragment shader development is repeating a coordinate system. It’s also quite neat, since it lets us draw several shapes without a loop. When working in 3D with raymarching it’s extra awesome, because we can fake an infinite number of repeated shapes in a space. Here I discuss one method of performing this repetition.

First let’s create a simple framework:

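    #ifdef GL_ES
    precision mediump float;
    #endif

    uniform vec2 u_res; // canvas resolution in pixels

    // Signed distance from p to the edge of a circle of radius r at the origin
    float sdCircle(vec2 p, float r){
        return length(p)-r;
    }

    void main(){
        vec2 p = (gl_FragCoord.xy/u_res)-0.5;
        float i = step(sdCircle(p, .5), 0.);
        gl_FragColor = vec4(vec3(i),1);
    }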

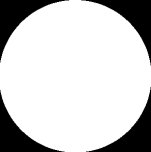

We define a vector p for each fragment that ranges from -.5 at the bottom left corner to +.5 at the top right, with the zero vector in the middle of the canvas.

We define a function that calculates the signed distance from p to the edge of a circle centered on the origin: negative inside the circle, positive outside. Using step makes the intensity variable (i) either 0 or 1.
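To make the sign convention concrete, here is a small sketch with a few sample points worked out by hand. The helper name insideCircle is hypothetical and only wraps the step call from main; the sample points are illustrative.

```glsl
// Signed distance to a circle of radius r centred on the origin.
float sdCircle(vec2 p, float r){
    return length(p) - r;
}

// Hypothetical helper: 1.0 inside or on the circle, 0.0 outside.
// step(edge, x) returns 0.0 when x < edge and 1.0 otherwise,
// so step(d, 0.0) is 1.0 exactly when d <= 0.0.
float insideCircle(vec2 p, float r){
    return step(sdCircle(p, r), 0.0);
}

// With r = 0.5:
//   sdCircle(vec2(0.0, 0.0), 0.5) = -0.5     centre, inside   -> i = 1.0
//   sdCircle(vec2(0.5, 0.0), 0.5) =  0.0     on the edge      -> i = 1.0
//   sdCircle(vec2(0.5, 0.5), 0.5) ~  0.207   corner, outside  -> i = 0.0
```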

It yields a happy circle:

Let’s say we want to repeat this circle 4 times along each axis. We’ll need to create a vector that repeats across the canvas several times. To help with this, we’ll use mod().

Now the span of the current coordinate system is 1 (-.5 to +.5), so if we want 4 cells along each axis, we should use mod(p, .25), or better yet mod(p, 1./4.), which is slightly more intuitive. (Keep the decimal points: in GLSL, 1/4 is integer division and evaluates to 0.) We’ll also need to adjust the radius of the circles so they fit in the new cell sizes. Let’s make the radius half the cell size.

    float cellSz = 1./4.;
    float r = cellSz/2.;
    p = mod(p, vec2(cellSz));
    float i = step(sdCircle(p, r), 0.);

It gives us:

Wait, what’s this? Why are the circles cut off and no longer happy?

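Well, remember how we used mod? For a positive divisor, mod returns non-negative values, in our case 0 up to (but not including) .25. This means that within each cell, the bottom left corner of p is (0,0) and the top right approaches (.25, .25). The circle is still centered on (0,0), so it sits on the corner of each cell and only a quarter of it lands inside.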
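To see why the range is never negative, it can help to write mod out by hand. The sketch below uses a hypothetical helper, modByHand, that follows the GLSL definition of mod(x, y) as x - y * floor(x / y). The sample inputs are just illustrative values on either side of zero.

```glsl
// Hypothetical helper that mirrors the built-in mod(x, y).
float modByHand(float x, float y){
    return x - y * floor(x / y);
}

// With y = 0.25 (results are approximate in floating point):
//   modByHand( 0.10, 0.25) ->  0.10 - 0.25 * floor( 0.4) = 0.10
//   modByHand( 0.30, 0.25) ->  0.30 - 0.25 * floor( 1.2) = 0.05
//   modByHand(-0.30, 0.25) -> -0.30 - 0.25 * floor(-1.2) = 0.20
```

Because floor rounds toward negative infinity, negative inputs wrap up into the same 0 to .25 range rather than mirroring around zero, which is why every cell ends up with the same local coordinates.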

What we actually want is a ‘centered’ range from vec2(-.125) to vec2(.125), right? But wait! We already did this! At the start of main we divided gl_FragCoord by the resolution and then subtracted .5. We just need to do something similar here: subtract half of whatever the cell size is.

    p = mod(p, vec2(cellSz))-vec2(0.5*cellSz);

Now our circles are happy again!
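Putting the pieces together, a complete shader might look like the sketch below. It assumes a WebGL-style environment that supplies the u_res uniform used earlier, and it pulls the wrap-and-recenter step into a helper named repeatCentered, which is a hypothetical name chosen for illustration.

```glsl
#ifdef GL_ES
precision mediump float;
#endif

uniform vec2 u_res; // canvas size in pixels, assumed to be set by the host app

// Signed distance to a circle of radius r centred on the origin.
float sdCircle(vec2 p, float r){
    return length(p) - r;
}

// Hypothetical helper: wraps p into a cell of size cellSz, then
// recentres it so the result lies in [-cellSz/2, cellSz/2).
vec2 repeatCentered(vec2 p, float cellSz){
    return mod(p, vec2(cellSz)) - vec2(0.5 * cellSz);
}

void main(){
    // -0.5 at the bottom left, +0.5 at the top right
    vec2 p = (gl_FragCoord.xy / u_res) - 0.5;

    float cellSz = 1.0 / 4.0; // 4 cells along each axis
    float r = cellSz / 2.0;   // radius is half the cell size

    p = repeatCentered(p, cellSz);

    float i = step(sdCircle(p, r), 0.0);
    gl_FragColor = vec4(vec3(i), 1.0);
}
```

Changing the 4.0 in cellSz changes the number of cells per axis, and the radius follows along because it is derived from the cell size.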