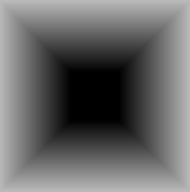

Since I’m fairly obsessed with pixel art, it’s no surprise that I really like voxel rendering, too.

I feel I’ve finally reached the point where I know enough about shader code to start deconstructing voxel shaders to understand them. So that’s what I did: I took a simple shader on ShaderToy apart and put it back together, renaming variables, making some changes and simplifying a few things along the way.

Here is the shader on ShaderToy. The original source I took the code from is credited at the top of the shader.

https://www.shadertoy.com/view/4lKyDW

Okay, so let’s dig in.

First, define some constants. Nothing too special here. MAX_STEPS caps the number of raymarching iterations. GrassHeight sets where grass starts inside each voxel. Grass and Dirt are the two colors.

```glsl
const float MAX_STEPS = 1000.;
const float GrassHeight = 0.8;
const vec3 Grass = vec3(.2, 1, 0);
const vec3 Dirt = vec3(.5, .25, 0);
```

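Next comes the entry point. ShaderToy calls mainImage() once for every pixel, so everything below runs per fragment. No magic yet.

```glsl
void mainImage(out vec4 fragColor, in vec2 fragCoord) {
```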

We’re raymarching, so we need to define where the ray starts. x can be whatever; it doesn’t really matter. y needs to be large enough that the camera sits above the terrain. z comes from the clock: iDate.w is the current time of day in seconds, so multiplying it by 5 moves the camera forward 5 units per second and animates the flyover.

```glsl
    vec3 rayOrigin = vec3(0., 12, iDate.w * 5.);
```

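Map the fragment coordinates from pixels to the range -1 to 1 on both axes, so that (0, 0) is the center of the screen. We’ll need this in a couple of places later on.

```glsl
    vec2 pnt = fragCoord.xy / iResolution.xy * 2. - 1.;
```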
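The small C port below checks the mapping with numbers. It runs on the CPU; the shader itself is GLSL, and the vec2 struct and to_screen() name are hypothetical stand-ins. The bottom-left corner lands at (-1, -1), the center at (0, 0) and the top-right corner at (1, 1).

```c
/* A 2D vector standing in for GLSL's vec2 (hypothetical helper type). */
typedef struct { float x, y; } vec2;

/* Map a pixel coordinate into -1..1 on both axes, mirroring
   fragCoord.xy / iResolution.xy * 2. - 1. from the shader. */
static vec2 to_screen(vec2 frag_coord, vec2 resolution) {
    vec2 p;
    p.x = frag_coord.x / resolution.x * 2.0f - 1.0f;
    p.y = frag_coord.y / resolution.y * 2.0f - 1.0f;
    return p;
}
```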

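Next, the ray direction. Unlike the ray origin, it varies for each fragment, spreading out from -1 to 1 on the xy plane. The z component is fixed at 1, so every ray points forward along positive z. The vector isn’t normalized, so rays toward the edges of the screen are a bit longer than the one through the center.

```glsl
    vec3 rayDir = vec3(pnt, 1.);
```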

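Declare the final color for this fragment. Start it out black, so fragments whose ray never hits anything still have a defined value.

```glsl
    // GLSL leaves uninitialized locals undefined, so start from black.
    vec3 color = vec3(0.);
```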

depth is how far we have travelled along the ray, measured in multiples of rayDir. It is the value that scales the ray direction vector.

```glsl
    float depth = 0.;
```

Now we reach the raymarching loop.

```glsl
    for (float i = 0.; i < MAX_STEPS; ++i) {
```

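The top half of the screen is empty sky, so it makes sense to check early and exit when we know the ray can’t hit anything. The hit test below needs floor(p.y) * 0.25 < cos() + sin(), and cos() + sin() is at most 2, so the terrain never reaches above y = 8. With the camera at y = 12, any ray with pnt.y > 0 only climbs away from the terrain. Of course, this check needs adjusting if rayOrigin changes. pnt doesn’t change inside the loop, so the check could just as well sit before it.

```glsl
        if (pnt.y > 0.) {
            break;
        }
```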

Pretty standard raymarching stuff: start at the origin and move a little farther along the ray direction every iteration. On the first iteration depth is zero, so p is just rayOrigin.

```glsl
        vec3 p = rayOrigin + (rayDir * depth);
```
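Here is the same step written out in C, as a CPU sketch with a hypothetical vec3 struct. At depth 0 the sample point is exactly the origin. With a direction of (0, -0.5, 1), a depth of 10 moves it 5 units down and 10 units forward.

```c
/* Stand-in for GLSL's vec3 (hypothetical helper type). */
typedef struct { float x, y, z; } vec3;

/* p = rayOrigin + rayDir * depth, one component at a time. */
static vec3 ray_point(vec3 origin, vec3 dir, float depth) {
    vec3 p = { origin.x + dir.x * depth,
               origin.y + dir.y * depth,
               origin.z + dir.z * depth };
    return p;
}
```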

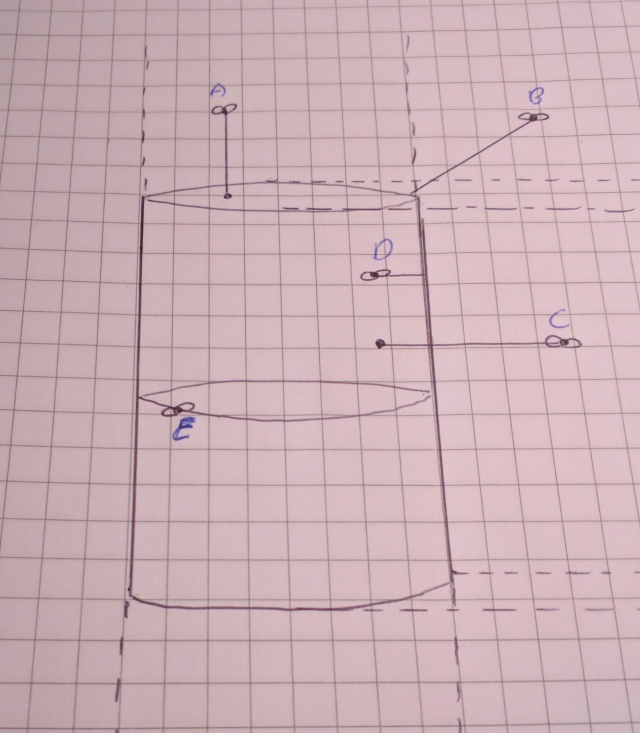

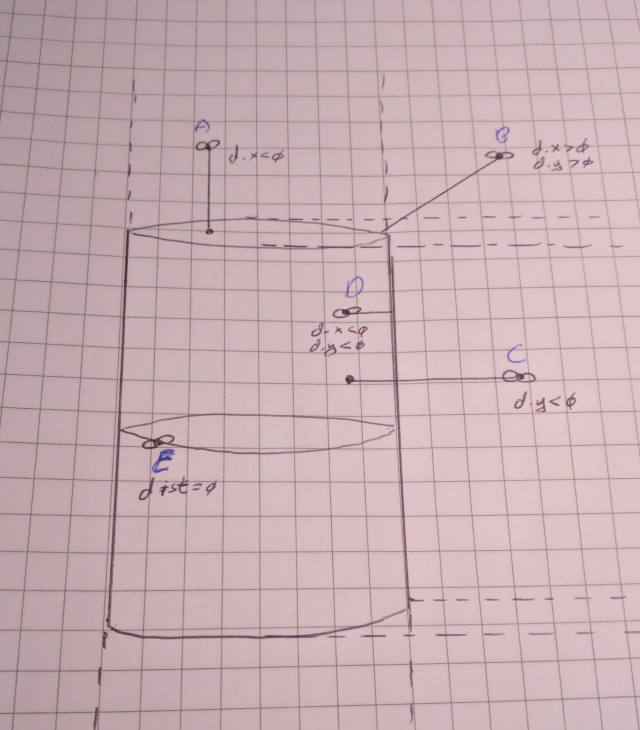

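Now we finally come to the actual ‘voxel’ part of the rendering. Flooring p snaps the raymarched point to the lower corner of the unit cell that contains it. Every point inside the same 3D box/grid cell therefore gives the same value, and the terrain comes out blocky.

The floored value is then scaled by 0.25 before it goes into the terrain function. Scaling by a smaller number stretches the terrain across more cells, so each hill is built from more voxels. That yields a higher ‘resolution’.

```glsl
        vec3 flr = floor(p) * 0.25;
```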

Next, let’s turn our attention to the wave-like pattern of the terrain. The peaks and valleys come from cos() and sin(). The height at each cell is cos(flr.z) + sin(flr.x), a wave that varies along z plus a wave that varies along x. Their sum ranges from -2 to 2.

This is where we decide whether the ray has hit the terrain. If the snapped y value is less than cos() + sin(), the point is inside a solid voxel and we color the fragment. Otherwise the point is still above the terrain, so we keep iterating.

```glsl
        if (flr.y < cos(flr.z) + sin(flr.x)) {
```

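On a hit, fract(p).y tells us how far up inside its voxel the hit point sits, from 0 at the bottom to 1 at the top. Anything above GrassHeight (the top 20% of the voxel) is painted grass; the rest is dirt.

```glsl
            // Position of the hit point inside its voxel, 0..1 on each axis.
            vec3 frct = fract(p);
            if (frct.y > GrassHeight) {
                color = Grass;
            } else {
                color = Dirt;
            }
```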

If we hit a voxel and set the color, there’s no need to keep iterating. Break out of the loop.

```glsl
            break;
        } // close the if
```

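depth scales the ray direction, so we increase it every iteration. The step size is i * (.1/MAX_STEPS), and i runs from 0 to 999, so each step grows from 0 to just under 0.1. Steps are tiny near the camera and get longer as the ray travels. If the ray never hits anything, depth adds up to 0.0001 × (0 + 1 + … + 999) = 0.0001 × 499500 = 49.95.

```glsl
        depth += i * (.1 / MAX_STEPS);
    } // close the loop
```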
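The step schedule is easy to check on the CPU. This C sketch, with hypothetical depth_after() and depth_closed_form() names, replays the loop in double precision and compares it with the closed form 0.1 / MAX_STEPS × n(n - 1) / 2. For n = 1000 both give 49.95.

```c
/* Replays the shader's schedule, depth += i * (0.1 / MAX_STEPS), for
   `steps` iterations with no hit, in double precision. */
static double depth_after(int steps, double max_steps) {
    double depth = 0.0;
    for (int i = 0; i < steps; ++i) {
        depth += i * (0.1 / max_steps);
    }
    return depth;
}

/* Closed form of the same sum: 0.1 / MAX_STEPS * n * (n - 1) / 2. */
static double depth_closed_form(int n, double max_steps) {
    return 0.1 / max_steps * n * (n - 1) / 2.0;
}
```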

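We need some lighting, even if it’s really fake, to give the illusion of distance. It also helps hide some of the aliasing at the far end of the terrain.

If the ray hit something early, depth is relatively small, so the fragment ends up bright.

The farther the ray travelled before hitting something, the darker that fragment will be. The multiplier is 1/(depth/5), i.e. 5/depth:

- a hit at depth 5 keeps the base color;
- a hit at depth 10 halves it;
- anything closer than 5 is brightened past the base color until it saturates.

Play around with this value for some interesting lighting effects.

```glsl
    // Same as color *= 5. / depth; max() keeps fragments that break out on
    // the first iteration (depth == 0) from dividing by zero.
    color *= 1. / max(depth / 5., 1e-3);
```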

```glsl
    fragColor = vec4(color, 1);
}
```

If you’ve followed most of this, try substituting noise values for the cos() and sin() functions. It makes for some really fun variation in the terrain.